Best Practices for Securing your HPC Cloud - Part I

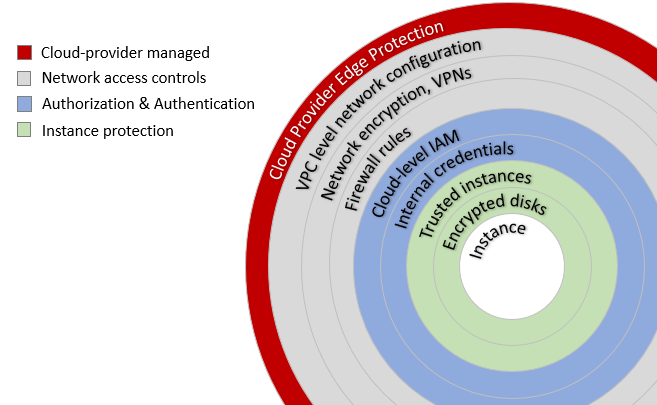

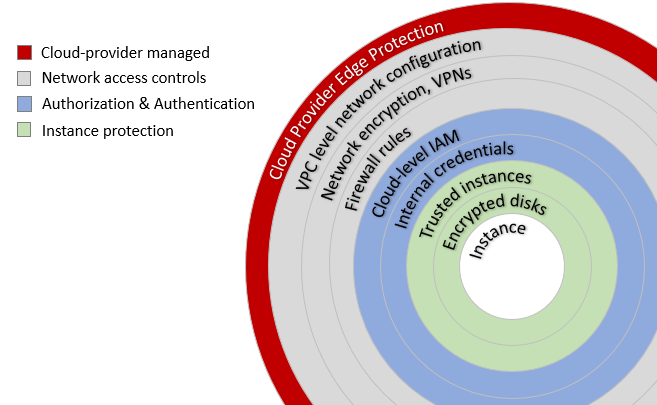

As HPC workloads find their way to the cloud, HPC users increasingly face security-related concerns similar to their corporate counterparts. The good news is that most cloud providers offer a wealth of tools and facilities to help secure cloud and hybrid-cloud cluster deployments. A good way to think about security is to imagine a series of layered defenses. If a malicious actor breaches one layer, the breach should ideally be detectable, and the remaining layers should keep applications and data safe.

To frame a cloud security discussion specific to HPC, we borrow from a visual created by Cohesive Networks in their 2016 blog. We've drawn the layers a little differently to emphasize topics of interest to administrators deploying cloud-based or hybrid-cloud HPC clusters. In this three-part article, we'll walk our way through the layers pictured above.

In this first article, we'll focus on perimeter and network security. In Part 2, we'll discuss considerations around identity & access management (IAM) and suggest best practices to keep clouds secure. In Part 3, we'll cover additional measures to help secure instances and applications.

To make our examples concrete, we'll discuss security in the context of Amazon Web Services (AWS). The same concepts apply to other major public cloud providers also, but some of the tools and terminology are different. We'll start with perimeter-level protections provided by the cloud service provider.

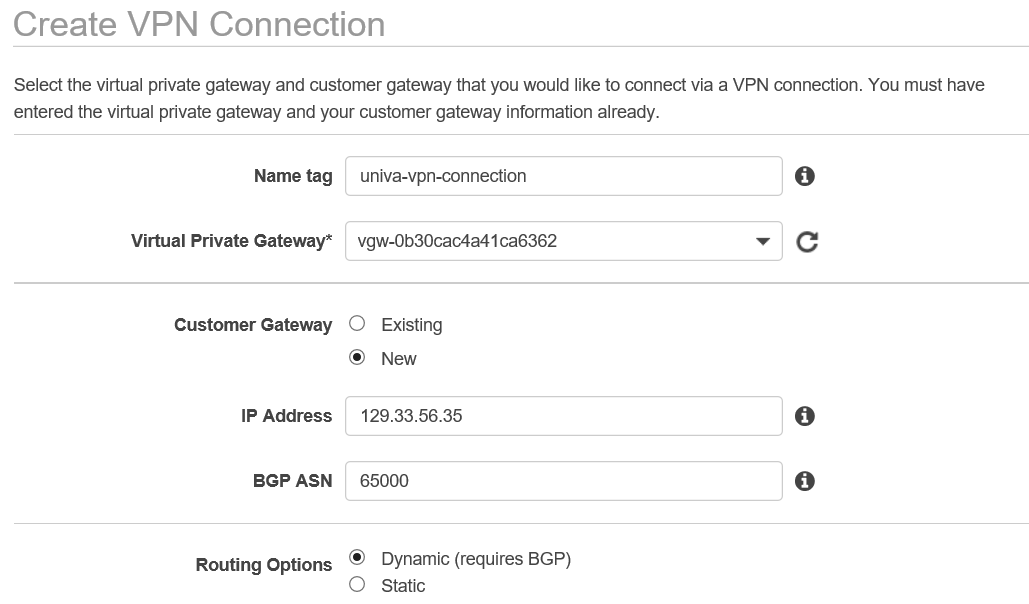

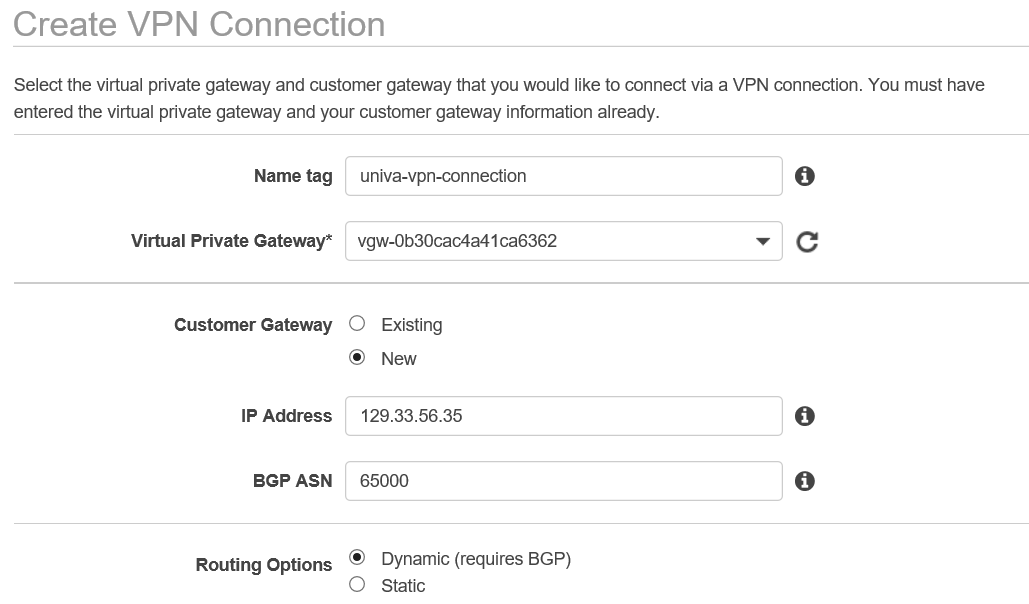

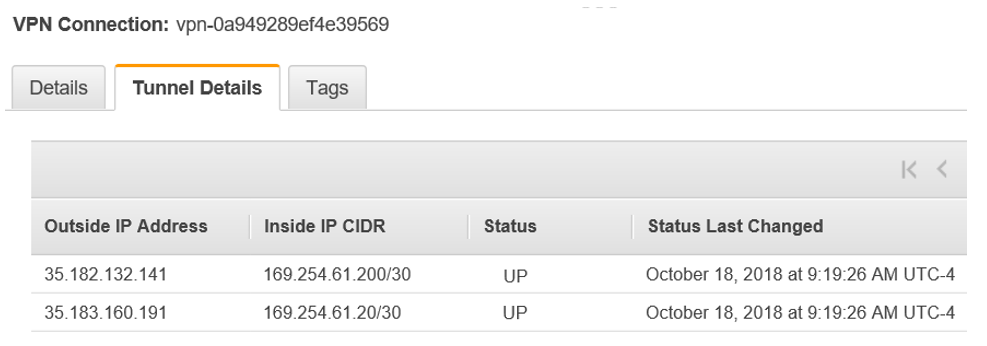

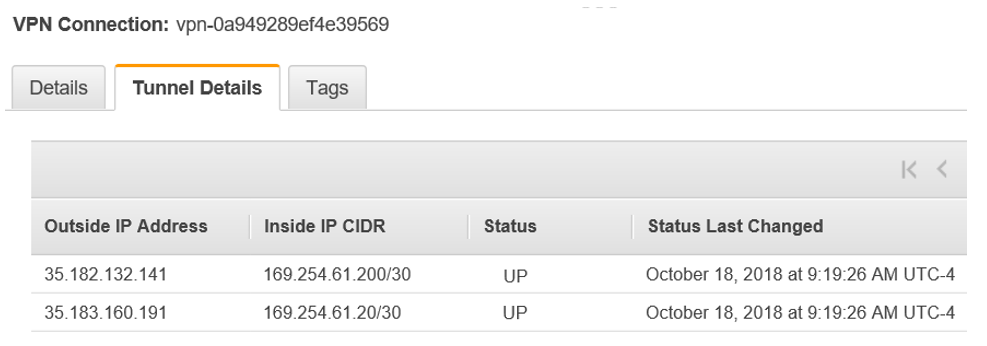

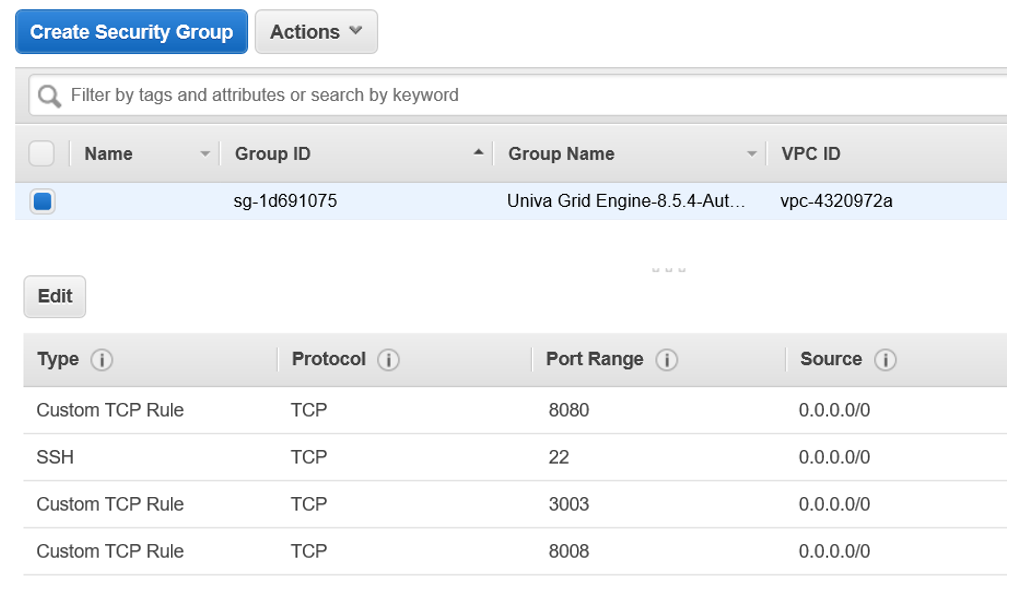

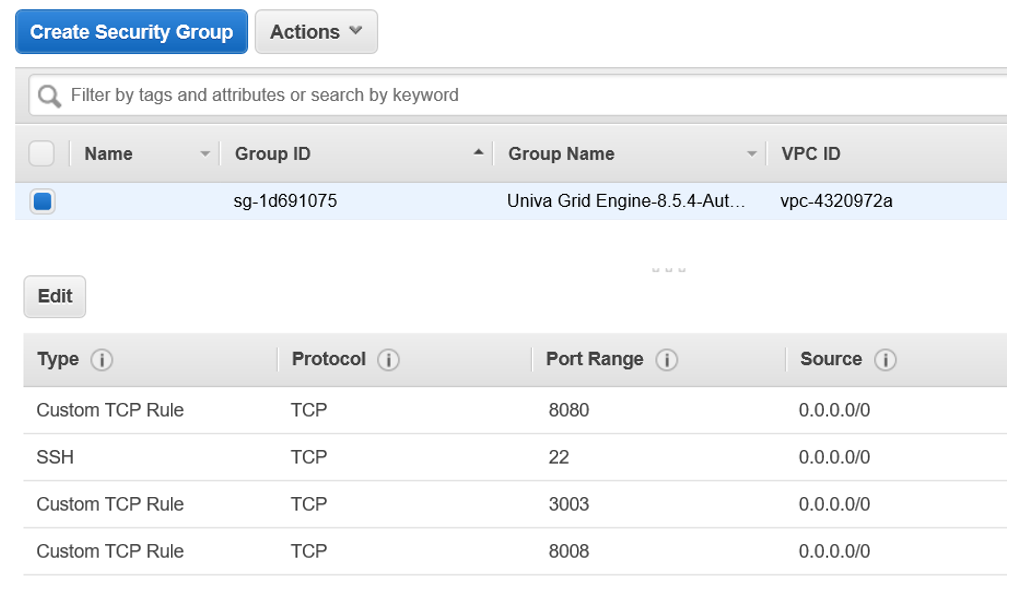

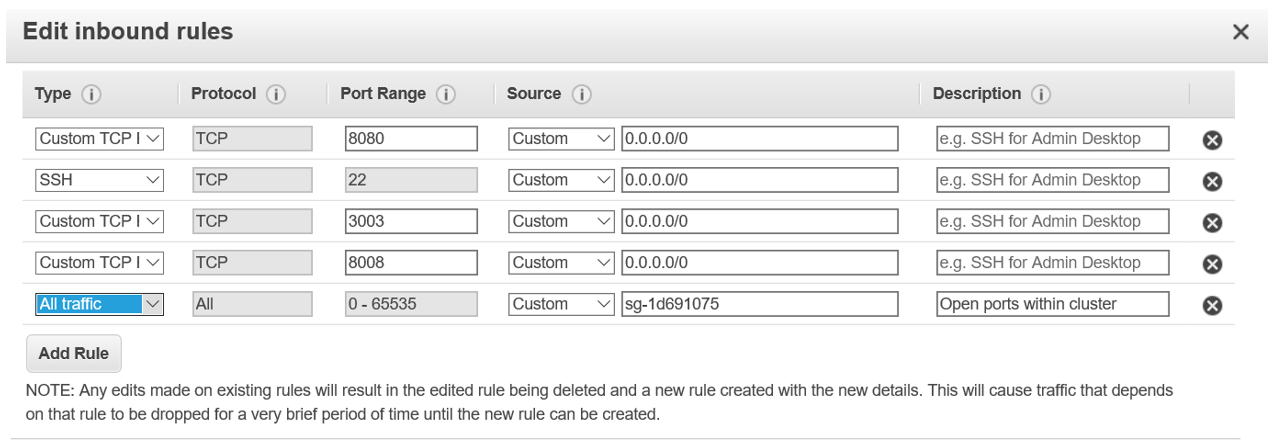

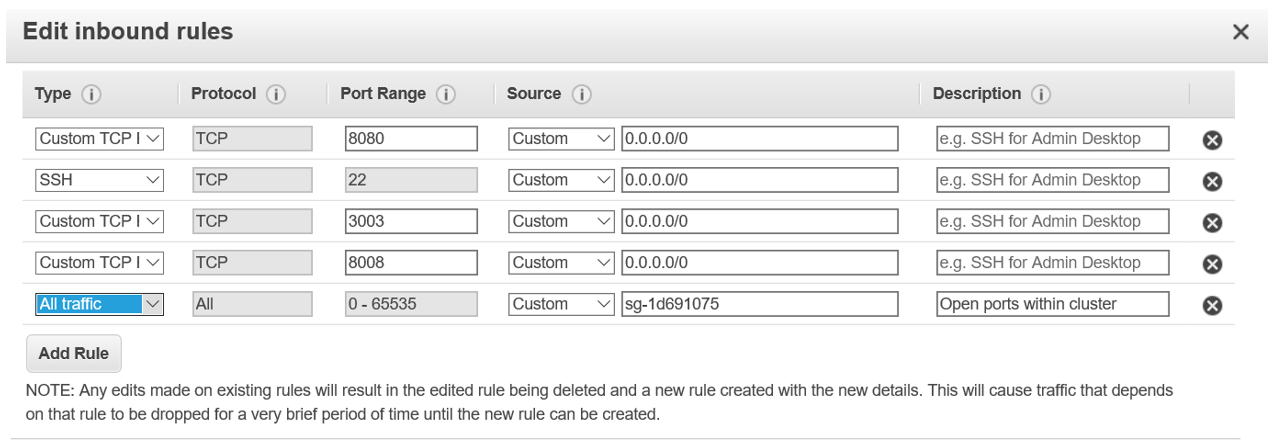

Cloud provider edge protection

Distributed Denial of Service (DDoS) attacks are one of the most common attack vectors on the internet. As the name implies, DDoS attacks make a website or cloud resource inaccessible by flooding it with network traffic. DDoS attacks can occur at multiple layers in the OSI network model, from the network layer to the application layer. Threats include UDP reflection attacks (network layer), SYN floods (transport layer), and DNS query or HTTP floods (application layer).

If you're running a private HPC cluster in the cloud accessed by a closed community, DDoS attacks are probably not a big concern. If your HPC environment presents a public interface like a website, portal, or API, as many increasingly do, DDoS attacks can pose a threat to your environment.

The good news is that most cloud providers offer robust protection against DDoS attacks. In AWS, customers benefit from automatic protections of AWS Shield Standard included at no charge for customers consuming AWS cloud services. This basic level of protection will be sufficient for many HPC users.

For HPC users building high-traffic, publicly accessible sites and using services like AWS Elastic Load Balancing (ELB), Amazon CloudFront, Amazon Route 53, or the AWS API Gateway to deploy a scalable API, AWS provides a whitepaper titled AWS Best Practices for DDoS Resiliency providing details on various DDoS mitigation techniques.

VPC level network configurations

The next layer of defense is at the network level. When HPC administrators deploy clusters to a public cloud (using tools like Navops Launch, AWS CfnCluster, or custom tools), instances are connected to a logically isolated virtual network referred to as a Virtual Private Cloud (VPC). While the cloud provider will attempt to make good default choices as instances are deployed, understanding how VPCs work is critical to securing your environment.

Virtual Private Clouds can use either IPv4 or IPv6 addressing with classless inter-domain routing (CIDR). For illustration, I can configure a VPC in a region with an IPv4 CIDR block such as 172.31.0.0/16, where the first two bytes specify the network, leaving 65,536 addresses. If I have multiple HPC clusters, I might divide my VPC into up to 16 separate subnets (in this example), each with 4,096 addresses. For readers familiar with CIDR, I can do this by creating subnets 172.31.0.0/20, 172.31.16.0/20, 172.31.32.0/20, and so on. Note that AWS reserves five addresses in every subnet, so slightly fewer are available for instances.
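The subnet arithmetic above can be checked with a few lines of Python. This is a minimal sketch using only the standard-library `ipaddress` module; it does not talk to AWS, and the helper name `split_vpc` is ours, chosen for illustration.

```python
import ipaddress


def split_vpc(vpc_cidr, new_prefix):
    """Return the list of equally sized subnets that tile a VPC CIDR block."""
    vpc = ipaddress.ip_network(vpc_cidr)
    return list(vpc.subnets(new_prefix=new_prefix))


# The example block from the text, carved into /20 subnets.
subnets = split_vpc("172.31.0.0/16", 20)

if __name__ == "__main__":
    print(f"{len(subnets)} subnets")
    for subnet in subnets[:3]:
        # num_addresses counts every address in the block, including
        # the ones a cloud provider may reserve for its own use.
        print(subnet, subnet.num_addresses)
```

Running the sketch lists the same first three subnets named in the text, which is a quick way to sanity-check an addressing plan before creating anything in the console.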

For each VPC or subnet, I can configure details such as:

- A routing table that may include an internet gateway

- A network ACL that allows or denies traffic by type, protocol or port-range

- A DHCP option set (at the VPC level)

Router and Internet Gateway settings

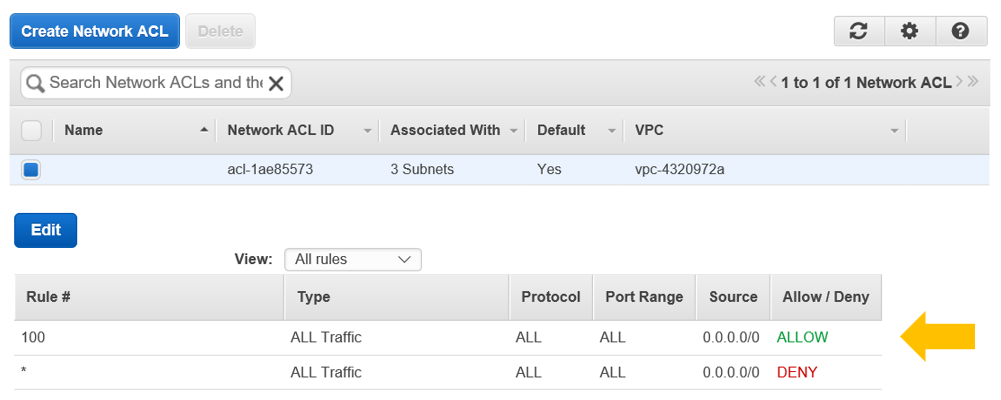

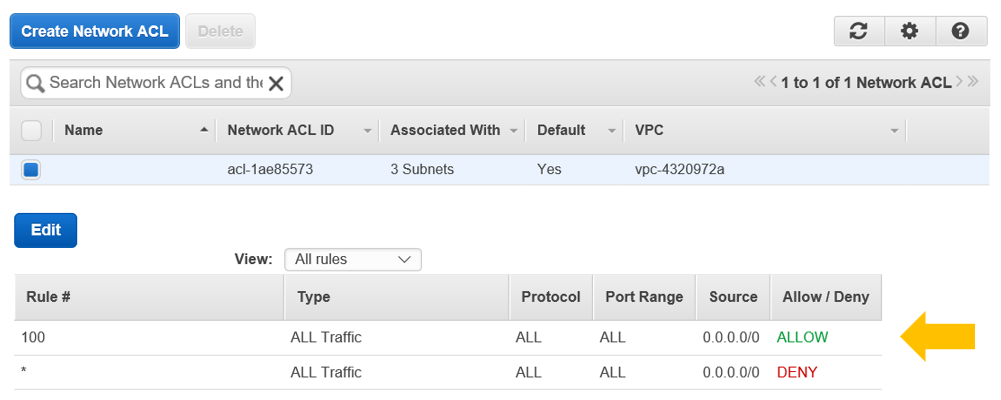

By default, a route from each VPC and subnet to an internet gateway is typically configured so that traffic can flow freely to and from the internet from attached machine instances. A default set of VPC-level Network ACLs (access control lists) is also created for each subnet. The default ACL settings are typically permissive as shown below allowing traffic to flow freely to all other networks from all sources. This makes the environment easy to set up and troubleshoot, but it's certainly not a best practice from a security standpoint.

In production environments, users may deploy multiple clusters on separate VPCs or subnets. It's worth taking some time to think about basic questions like whether each cluster needs internet access, and whether users on one cluster need access to other clusters on different VPCs or subnets. The more you can limit access to individual VPCs or subnets, the more secure the environment will be.

Clusters will also be more secure if you don't associate an internet gateway with a VPC or subnet, but cluster users often need access to the public internet for legitimate reasons. A good fallback position can be to enable outbound traffic only using an egress-only internet gateway (for IPv6 traffic) or a NAT gateway (for IPv4 traffic). NAT gateways allow machine instances on a private subnet to connect to the internet or other AWS services but prevent actors on the internet from initiating a connection back to instances attached to your VPC. Once a NAT gateway is created, you can update the routing tables associated with VPCs or subnets to route traffic through the NAT gateway rather than the internet gateway supplied by default.
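To see what the routing change accomplishes, here is a small model of a route table lookup. It is a sketch only: the target labels `local` and `nat-gateway` are placeholders rather than real AWS resource IDs, and the function simply applies the most-specific-match rule that AWS route tables use.

```python
import ipaddress


def select_route(route_table, destination):
    """Return the target of the most specific route matching destination.

    route_table maps a CIDR string to a target label. Returns None when
    no route matches.
    """
    addr = ipaddress.ip_address(destination)
    best = None
    for cidr, target in route_table.items():
        network = ipaddress.ip_network(cidr)
        # Keep the matching route with the longest prefix.
        if addr in network and (best is None or network.prefixlen > best[0].prefixlen):
            best = (network, target)
    return best[1] if best else None


# Hypothetical route table for a private subnet in the example VPC:
# traffic inside the VPC stays local, everything else leaves via NAT.
PRIVATE_SUBNET_ROUTES = {
    "172.31.0.0/16": "local",
    "0.0.0.0/0": "nat-gateway",
}

if __name__ == "__main__":
    print(select_route(PRIVATE_SUBNET_ROUTES, "172.31.16.10"))
    print(select_route(PRIVATE_SUBNET_ROUTES, "203.0.113.10"))
```

Because the default route points at the NAT gateway rather than an internet gateway, instances can reach out, but nothing in the table gives outside hosts a path to initiate connections inward.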

Consider segmenting different groups on separate subnets or VPCs. For common services needed by multiple clusters (license servers, Navops Launch server, shared file servers, etc.), consider putting these on their own VPC with no associated internet gateway, reachable only by nodes on the VPCs or subnets that need to connect to them.

VPC Peering is another feature that may be useful to cluster administrators. VPC peering allows administrators to connect two VPCs with different IP addressing schemes and allow instances on each VPC to communicate as if they were on the same network to share data or for other purposes. VPC peering can be configured across AWS data centers or multiple AWS accounts.
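One practical constraint worth checking before setting up a peering connection is that the two VPCs' CIDR blocks must not overlap. The sketch below performs that check locally with the standard-library `ipaddress` module; the function name `can_peer` and the second CIDR block are illustrative assumptions, not values from any real deployment.

```python
import ipaddress


def can_peer(cidr_a, cidr_b):
    """Return True when two VPC CIDR blocks do not overlap."""
    net_a = ipaddress.ip_network(cidr_a)
    net_b = ipaddress.ip_network(cidr_b)
    return not net_a.overlaps(net_b)


if __name__ == "__main__":
    # Distinct address ranges: peering is possible.
    print(can_peer("172.31.0.0/16", "10.0.0.0/16"))
    # The second block sits inside the first: peering would be rejected.
    print(can_peer("172.31.0.0/16", "172.31.16.0/20"))
```

Planning non-overlapping ranges up front is much easier than renumbering a running cluster later.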

Network encryption and VPNs

A common requirement in HPC environments is to provide a virtual private network (VPN) that tunnels IP traffic from a local data center to one or more VPCs operated by the cloud provider. VPNs are useful for cloud-bursting scenarios where capacity on a local cluster might be expanded dynamically into the cloud to handle peak workloads. A VPN is also useful because it provides client workstations on your network with seamless access to cloud-based cluster nodes.

AWS offers a variety of VPN solutions. With an AWS managed VPN, a virtual private gateway is created and associated with a VPC. The virtual private gateway is paired with a customer gateway, which is either a physical appliance or software in your local data center that is internet accessible via a static IP address or sits behind a NAT device. A customer gateway resource is created in AWS that points to the on-premise gateway, and routing tables are configured to allow traffic to flow through the VPN. The process for creating a VPN via the AWS web console (after creating the virtual private gateway and associating it with a VPC) is shown below:

Once you specify details like the ID of the virtual private gateway and the address of the on-premise customer gateway, AWS will create a VPN connection as shown below and automatically provide two internet-accessible IP addresses as well as internal IP addresses for the tunnel endpoints.

Once the VPN tunnel is established, IPsec-secured connections can be initiated from the customer gateway to cluster nodes attached to the VPC. Packets are automatically encrypted as they leave your data center and decrypted at the cloud end of the tunnel (and vice versa). Using VPN tunnels for network encryption, together with appropriate on-premise firewalls and appropriately secured VPCs and subnets, helps secure your cluster from threats originating both inside and outside the cloud provider's network.

AWS Direct Connect is another way that customers can create a direct connection from their local data center to AWS (bypassing internet service providers) allowing secure communication via a standard Ethernet fiber-optic cable providing 1 Gbps or 10 Gbps transfer speeds.

Instance-level Firewall Settings (Security Groups)

We could have talked about instance-level firewalls in the context of instance-level security, but since this article deals with network security, it seems appropriate to cover it here. Most Linux administrators are familiar with firewalls. With on-premise clusters, firewalls are typically implemented by network elements like routers or by OS-level software that needs to be explicitly configured (iptables for example).

In the cloud, firewalls are virtual constructs, implemented at the level of a virtual private cloud (VPC) controlling what traffic can enter and exit from each machine instance attached to the VPC.

Earlier we talked about how Network ACLs control what traffic enters or leaves a VPC or cloud subnet. Security Groups are similar, but these define firewall policies controlling traffic to and from a VPC at the instance level. Security Groups provide another layer of defense above and beyond VPC or subnet-level Network ACLs.

In HPC environments there may be tens or even hundreds of machine instances. Configuring firewall policies for each node would be tedious. Fortunately, a Security Group can be defined once and applied to multiple machine instances.

A sample Security Group for an Altair Grid Engine cluster is shown below. The Security Group is associated with the VPC that the cluster machine instances attach to and defines inbound and outbound rules for network traffic.

Applying a security group to cluster nodes makes the environment more secure because cluster nodes will only accept traffic on ports that are specifically authorized. For example, we allow external connections on port 22 for SSH, port 8080 for the Web GUI and a few other ports required for specific services. The ports you open will depend on your application environment, but in general, it is a good idea only to open ports that need to be open.
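The allow-list behavior described above can be modeled in a few lines. This is a simplified sketch, not the AWS API: the `InboundRule` class is our own, the source range `203.0.113.0/24` is a documentation address standing in for an office network, and only the two ports named in the text are included.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class InboundRule:
    protocol: str      # e.g. "tcp"
    from_port: int     # first port in the allowed range
    to_port: int       # last port in the allowed range
    source_cidr: str   # addresses permitted to connect


def is_allowed(rules, protocol, port, source_ip):
    """Return True if any rule permits the connection; otherwise deny."""
    addr = ipaddress.ip_address(source_ip)
    return any(
        rule.protocol == protocol
        and rule.from_port <= port <= rule.to_port
        and addr in ipaddress.ip_network(rule.source_cidr)
        for rule in rules
    )


# Hypothetical rules: SSH and the Web GUI, reachable from one office range.
CLUSTER_RULES = [
    InboundRule("tcp", 22, 22, "203.0.113.0/24"),
    InboundRule("tcp", 8080, 8080, "203.0.113.0/24"),
]

if __name__ == "__main__":
    print(is_allowed(CLUSTER_RULES, "tcp", 22, "203.0.113.7"))
    print(is_allowed(CLUSTER_RULES, "tcp", 3306, "203.0.113.7"))
```

Note that the model contains no deny rules: anything not explicitly allowed is refused, which is why keeping the list of open ports short matters.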

Administrators can make the environment even more secure by having different security groups applied to different types of cluster nodes. For example, head-nodes or master hosts might have different firewall policies than visualization nodes or compute hosts because different types of hosts expose different services.

A challenge with HPC clusters running workload management software such as Altair Grid Engine, or MPI, NFS, and other distributed applications, is that open communication may be required between cluster nodes. Some applications negotiate the ports they communicate on dynamically at runtime, so port numbers are not always knowable in advance.

Fortunately, there is an easy way to open communication within the cluster but still protect nodes from IP addresses outside the cluster. We can simply create an inbound rule as shown below. With this technique the Security Group definition references itself, allowing all machine instances attached to the security group to communicate openly.
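The self-referencing technique is easier to reason about with a toy model. In the sketch below, a rule names a source security group instead of an address range, so membership in the group is what grants access. The group IDs `sg-cluster` and `sg-other`, the instance names, and the data layout are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Instance:
    name: str
    groups: frozenset  # security group IDs attached to the instance


# Maps a security group to the source groups it accepts all traffic from.
# "sg-cluster" lists itself, which is the self-reference from the text.
INBOUND_SOURCES = {"sg-cluster": {"sg-cluster"}}


def may_connect(source, destination, inbound_sources=INBOUND_SOURCES):
    """Return True if any group on destination accepts a group on source."""
    for group in destination.groups:
        allowed = inbound_sources.get(group, set())
        if allowed & source.groups:
            return True
    return False


head = Instance("head-node", frozenset({"sg-cluster"}))
compute = Instance("compute-01", frozenset({"sg-cluster"}))
outsider = Instance("other-host", frozenset({"sg-other"}))

if __name__ == "__main__":
    print(may_connect(head, compute))      # both are cluster members
    print(may_connect(outsider, compute))  # not a member of the group
```

New nodes gain access simply by being attached to the group, so the rule does not need to change as the cluster scales up or down.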

In Part 2 of this article, we'll move beyond network security and talk about security best practices related to Authorization & Authentication and Identity and Access Management at both the cloud provider level and within the HPC environment.