Containers for HPC Cloud

Understanding the big three container platforms: Docker, Singularity, and Shifter

A few years ago, when the container hype was at its peak, one could have been forgiven for thinking that virtualization was all but dead. Microvisors were the new hypervisors, and container orchestration platforms were all the rage. Containers offered advantages for scaled-out cloud services, but if you were running MPI applications on an HPC cluster, you might have wondered what all the fuss was about. Fast forward to today, and containers have made their way into the HPC mainstream. As HPC users embrace cloud computing and need fast, reliable application deployment and portability across hosts and clouds, the benefits of containers are clear.

With microservices architectures, the watchwords are small, simple, and separable. Application architects might deploy dozens or even hundreds of pint-sized containers per host, managed by an orchestration framework like Kubernetes. In HPC, by contrast, containers tend to be beefier: they hold monolithic applications with prerequisite libraries and system software, in images often several gigabytes in size. HPC sites tend to run fewer, larger containers per host, and the challenge is less about orchestration and more about making containers work seamlessly with HPC tools, middleware, and other non-containerized workloads.

In this article, we contrast three HPC-friendly container solutions (Docker, Singularity, and Shifter) and offer some perspectives on which solution might make sense in which situations.

A primer on containers

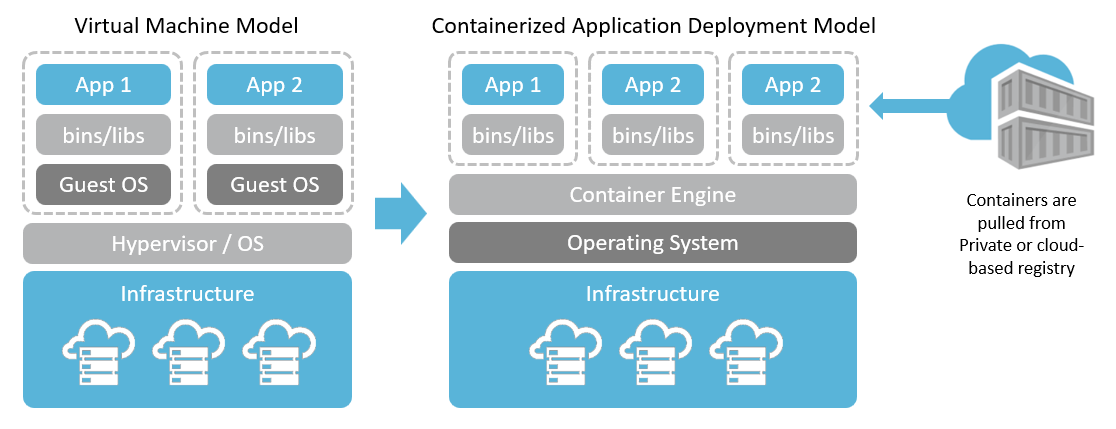

Most readers are probably familiar with the basics of containers, but for those new to the topic, we offer a quick primer. Containers are another way of packaging and isolating workloads on a shared host. Unlike virtual machines, which rely on an OS-hosted or bare-metal hypervisor to support multiple independent operating systems, containers rely on OS-level virtualization, taking advantage of Linux kernel features such as namespaces (which control what a process can see) and cgroups (which control what resources it can consume).

For Linux administrators, a container is best thought of as a collection of processes running on a shared Linux kernel. A privileged user on the host operating system sees all the processes, but processes inside a container see only that container's processes and appear to be running on their own OS. Because managing containers simply involves starting or stopping processes on a running OS instance, the overhead is low. Unlike virtual machines, which need to boot an operating system instance to start, containers can often be started or stopped in seconds, depending on their size.
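To make the "processes on a shared kernel" idea concrete, here is a minimal Python sketch, assuming a Linux host with /proc mounted. It reads the namespace identifiers the kernel exposes under /proc/<pid>/ns and parses the cgroup membership lines in /proc/<pid>/cgroup. Two processes in the same container share namespace identifiers, while a process in a different container usually differs in several of them. All function names here are hypothetical helpers, not part of any container tool.

```python
from __future__ import annotations

import os
import re

# Each entry in /proc/<pid>/ns is a symlink whose target looks like "pid:[4026531836]";
# the bracketed number identifies the namespace instance.
_NS_LINK = re.compile(r"^(\w+):\[(\d+)\]$")


def parse_ns_link(target: str) -> tuple[str, int]:
    """Split a namespace link target into (namespace type, namespace inode)."""
    match = _NS_LINK.match(target)
    if match is None:
        raise ValueError(f"unexpected namespace link: {target!r}")
    return match.group(1), int(match.group(2))


def namespaces(pid: str = "self") -> dict[str, int]:
    """Map each namespace entry of a process to its identifier (Linux only)."""
    ns_dir = f"/proc/{pid}/ns"
    result: dict[str, int] = {}
    for entry in sorted(os.listdir(ns_dir)):
        try:
            _, inode = parse_ns_link(os.readlink(os.path.join(ns_dir, entry)))
        except (OSError, ValueError):
            continue  # unreadable without privileges, or an unexpected format
        result[entry] = inode
    return result


def differing_namespaces(pid_a: str, pid_b: str) -> set[str]:
    """Names of the namespaces in which two processes are isolated from each other."""
    a, b = namespaces(pid_a), namespaces(pid_b)
    return {name for name in a.keys() & b.keys() if a[name] != b[name]}


def parse_cgroup_line(line: str) -> tuple[int, list[str], str]:
    """Parse one /proc/<pid>/cgroup line: 'hierarchy-ID:controller-list:cgroup-path'."""
    hierarchy, controllers, path = line.rstrip("\n").split(":", 2)
    return int(hierarchy), [c for c in controllers.split(",") if c], path
```

Run as root on the host, comparing `"self"` with the PID of a containerized process would typically report namespaces such as `mnt`, `pid`, `uts`, `ipc`, and `net`. Reading another user's /proc entries requires privileges, which mirrors the point that only a privileged host user sees every container's processes.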

Containers in HPC

In HPC, the use case for containers is different from that in the enterprise. While this may change in the future, HPC applications tend to be monolithic, as explained above. To the extent that applications exploit parallelism, they do so through multi-threading across processor cores and hardware threads, through messaging libraries like MPI that enable fast message passing between processes running across many nodes (parallel jobs), or through workload managers able to schedule large numbers of discrete jobs on a shared cluster. For HPC users, the value of containerization lies mostly in encapsulating applications and making them portable. Containerized HPC applications are emerging as a market, with companies like UberCloud pioneering the idea of portable, containerized applications. So, without further delay, let's meet the container platforms.

Docker

The idea of OS-level virtualization has been around for a long time, but the release of open-source Docker in 2013 was a game changer. Docker provided a standard set of tools to build and manage container images, a runtime and tools to manage containers, and an internet-accessible image repository called Docker Hub. These innovations made it easy for developers to package and share containerized applications. Docker became so popular that the name has practically become synonymous with containers; in some circles, developers talk about "dockerizing" their applications. Pulling and running a Docker image is as easy as running “docker pull” followed by “docker run” on any host with Docker Engine installed.
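The two-step pull-then-run workflow can be scripted. Below is a minimal Python sketch, assuming the `docker` CLI is on the PATH; `docker_pull_and_run` is a hypothetical helper, and `ubuntu:22.04` is just an example image. By default it only returns the command lines (a dry run), so it can be inspected without Docker installed.

```python
from __future__ import annotations

import shlex
import shutil
import subprocess


def docker_pull_and_run(image: str, command: list[str], dry_run: bool = True) -> list[list[str]]:
    """Return the 'docker pull' and 'docker run' invocations; execute them unless dry_run."""
    steps = [
        ["docker", "pull", image],                   # fetch the image from Docker Hub or another registry
        ["docker", "run", "--rm", image, *command],  # start a container; --rm removes it when it exits
    ]
    if not dry_run:
        if shutil.which("docker") is None:
            raise RuntimeError("docker CLI not found; is Docker Engine installed?")
        for argv in steps:
            subprocess.run(argv, check=True)  # raise if either step fails
    return steps


if __name__ == "__main__":
    for argv in docker_pull_and_run("ubuntu:22.04", ["cat", "/etc/os-release"]):
        print(shlex.join(argv))
```

Passing argument lists to `subprocess.run` rather than a single shell string avoids quoting problems when image names or commands contain special characters.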

Docker Inc. provides both an open-source Docker Community Edition and a commercially supported Docker Enterprise edition. Docker Enterprise provides the Docker Engine along with developer tools, registry services, tools for policy and governance, lifecycle management, and more. Docker containers can be deployed on-premises, on Docker's own Docker Cloud, or on various public clouds, including Amazon Web Services, Microsoft Azure, and Google Cloud.

For users running Altair Grid Engine, enhancements to the scheduler have made it easy to run Docker workloads. Users can dispatch jobs that run in Docker containers created from a specified Docker image on an Altair Grid Engine cluster. With this integration, Docker workloads are managed like any other job, with support for suspending, resuming, and killing jobs, handling input, output, and error files, limiting resource consumption, and job-level accounting.

One concern that HPC administrators sometimes raise about Docker is that the Docker daemon on each host needs to run as root, which can create security risks. The concern is valid, but the Docker team has provided mitigations: administrators can configure the Docker daemon carefully and remap root inside the container to an unprivileged UID on the host operating system (user namespace remapping), as described in the Docker documentation.
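With user namespace remapping enabled via the `userns-remap` key in Docker's daemon.json (the value `"default"` tells dockerd to create and use a `dockremap` user), UID 0 inside a container corresponds to the first UID of a subordinate range listed in /etc/subuid. The sketch below shows that arithmetic. The helper names and the example range `dockremap:231072:65536` are illustrative assumptions, and it handles only a single subordinate range (GIDs via /etc/subgid work the same way).

```python
from __future__ import annotations

import json

# daemon.json fragment (typically /etc/docker/daemon.json) that enables user namespace remapping.
DAEMON_JSON = json.dumps({"userns-remap": "default"}, indent=2)


def parse_subid_line(line: str) -> tuple[str, int, int]:
    """Parse an /etc/subuid entry of the form 'name:first_id:count'."""
    name, first, count = line.strip().split(":")
    return name, int(first), int(count)


def host_uid(container_uid: int, subuid_line: str) -> int:
    """Map a UID inside a remapped container to the unprivileged UID it has on the host."""
    _, first, count = parse_subid_line(subuid_line)
    if not 0 <= container_uid < count:
        raise ValueError(f"UID {container_uid} is outside the {count} remapped IDs")
    return first + container_uid
```

With the example range, root in the container (UID 0) is UID 231072 on the host, so a process that escapes the container holds no host privileges.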

Singularity

A second container platform gaining popularity in HPC circles is Singularity. Singularity began in 2015 as an open-source project led by Gregory Kurtzer of Lawrence Berkeley National Laboratory. Although younger than Docker, Singularity set out to solve challenges specific to HPC and has since been embraced by several high-profile labs. As of 2018, Singularity is estimated to have 25,000 installations, including top HPC centers such as TACC, the San Diego Supercomputer Center, and Oak Ridge National Laboratory. In February 2018, key Singularity developers founded Sylabs Inc., which offers Singularity Pro, a commercially supported version of Singularity, along with a Singularity Registry Service similar to Docker Hub.

Singularity packages a container as a single image file containing all of its dependencies, which makes Singularity images simpler to copy, share, and stage than Docker images. Docker, by contrast, assembles containers at run time from layers managed behind the scenes by the Docker engine. Each Docker layer is a tar archive containing the files that make up that layer. Singularity is flexible, allowing users to run either Docker-format images or images in Singularity's native format.
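Docker's layering can be illustrated with a simplified, hypothetical `flatten_layers` helper that applies tar layers in order, the way a union file system presents them: a file in a higher layer shadows the same path below it, and a whiteout entry (the `.wh.<name>` convention from the OCI image specification) deletes a path from lower layers. Real engines present this merged view through storage drivers such as overlay2 rather than by copying; a Singularity image built from a Docker image already holds the flattened result in one file.

```python
from __future__ import annotations

import io
import posixpath
import tarfile

WHITEOUT_PREFIX = ".wh."  # ".wh.<name>" in a layer deletes <name> from the layers below it


def make_layer(files: dict[str, bytes]) -> bytes:
    """Build an uncompressed tar layer in memory (helper for the example)."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w") as tar:
        for name, data in files.items():
            info = tarfile.TarInfo(name)
            info.size = len(data)
            tar.addfile(info, io.BytesIO(data))
    return buf.getvalue()


def flatten_layers(layers: list[bytes]) -> dict[str, bytes]:
    """Apply tar layers from lowest to highest and return the merged view: path -> contents.

    Simplified: only regular files and plain whiteouts are handled; directories,
    links, permissions, and opaque whiteouts (".wh..wh..opq") are ignored.
    """
    merged: dict[str, bytes] = {}
    for layer in layers:
        with tarfile.open(fileobj=io.BytesIO(layer)) as tar:
            for member in tar.getmembers():
                path = posixpath.normpath(member.name).lstrip("/")
                parent, base = posixpath.split(path)
                if base.startswith(WHITEOUT_PREFIX):
                    # Remove the whited-out path and anything beneath it.
                    target = posixpath.join(parent, base[len(WHITEOUT_PREFIX):])
                    for existing in list(merged):
                        if existing == target or existing.startswith(target + "/"):
                            del merged[existing]
                elif member.isfile():
                    # A file in a higher layer shadows the same path in lower layers.
                    merged[path] = tar.extractfile(member).read()
    return merged
```

For example, a base layer containing `etc/os-release` and `opt/tool`, topped by a layer that replaces `opt/tool` and whites out `etc/os-release`, flattens to a single file, `opt/tool`, with the upper layer's contents.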

Singularity also offers the advantage that containers run under the Linux user ID of the user who launches them, sidestepping the security concern some users have about the Docker daemon running as root on Linux hosts. Singularity containers can be launched like regular Linux commands from scripts (for example, singularity run analysis.img), which makes them straightforward to integrate with workload managers. Singularity also has advantages for running MPI applications: MPI-enabled Singularity images can be launched directly from the mpirun command line, and containerized applications have direct access to resources such as GPUs and parallel file systems. Running Singularity on Altair Grid Engine is already straightforward, and in July of this year, Altair and Sylabs Inc. announced a collaboration to further improve the integration of Singularity containers on Altair Grid Engine clusters.
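Because a Singularity container is launched like an ordinary command, the host's mpirun can start one containerized process per MPI rank from a normal batch script. The sketch below generates such a Grid Engine job script. The parallel environment name `mpi`, the job name, and the image and application paths in the example are hypothetical (parallel environment names are defined per site), and it assumes the MPI library inside the image is compatible with the host's MPI, which this launch model generally requires.

```python
from __future__ import annotations

import shlex


def singularity_mpi_job(image: str, app: list[str], slots: int,
                        pe_name: str = "mpi", job_name: str = "sing_mpi") -> str:
    """Return a Grid Engine batch script that runs a Singularity image under the host's mpirun."""
    if slots < 1:
        raise ValueError("slots must be at least 1")
    app_cmd = " ".join(shlex.quote(arg) for arg in app)
    lines = [
        "#!/bin/bash",
        f"#$ -N {job_name}",
        f"#$ -pe {pe_name} {slots}",  # request the slots from a (site-specific) parallel environment
        "#$ -cwd",                    # run the job in the submission directory
        # Grid Engine sets NSLOTS to the number of granted slots; mpirun starts that many
        # ranks, and each rank runs the application inside the container as the submitting user.
        f"mpirun -np $NSLOTS singularity exec {shlex.quote(image)} {app_cmd}",
    ]
    return "\n".join(lines) + "\n"
```

Writing the returned string to a file and submitting it with `qsub` would queue the job like any other parallel job; no daemon or root privileges are involved in starting the containers.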

Shifter

Like Singularity, Shifter emerged from the HPC community, specifically from NERSC's efforts to provide a scalable way of deploying containers in its HPC environments. Like the other container platforms, Shifter has its own image format, but Shifter images can be created from Docker images, virtual machine images, or CHOS images (CHOS is a utility used to create a Scientific Linux environment).

When Docker images are used with Shifter, the images still reside in Docker Hub (or a private image registry), but a Shifter gateway automatically converts them to Shifter's native format. Like Singularity, Shifter sidesteps concerns about root escalation attacks in Docker: Shifter containers run under the user ID of the user who launches them. MPI applications are supported with Shifter as well, but the implementation is MPICH-centric.
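The gateway workflow can be sketched as two commands: ask the gateway to pull and convert a Docker image, then run a command inside the converted image. This Python sketch assumes the `shifterimg pull docker:<name>:<tag>` and `shifter --image=docker:<name>:<tag> <command>` command forms; verify them against your site's Shifter documentation. The helper names are hypothetical.

```python
from __future__ import annotations


def shifter_image_ref(image: str) -> str:
    """Normalise a Docker image name into the assumed 'docker:name:tag' form used with Shifter."""
    if image.startswith("docker:"):
        return image
    _, sep, tag = image.rpartition(":")
    if not sep or "/" in tag:
        # No tag given (a colon before the last '/' belongs to a registry host:port).
        return f"docker:{image}:latest"
    return f"docker:{image}"


def shifter_commands(image: str, command: list[str]) -> list[list[str]]:
    """Return the pull-and-convert step followed by the run step."""
    ref = shifter_image_ref(image)
    return [
        ["shifterimg", "pull", ref],              # gateway fetches from the registry and converts the image
        ["shifter", f"--image={ref}", *command],  # runs the command in the converted image as the calling user
    ]
```

Defaulting to the `latest` tag mirrors Docker's own behavior when no tag is given, so the same image name resolves to the same image in both tools.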

| Comparison point | Docker | Singularity | Shifter |
|---|---|---|---|
| Orientation | Enterprise, microservices, general purpose | Built for HPC, monolithic workloads | Built for HPC needs at NERSC and large labs running Cray systems |
| Image format | Layered Docker image format (built from a Dockerfile) | Native single-file .img format, with tools to convert from multiple formats (tar, tar.gz, SquashFS, Docker Hub) | Native Shifter format (created from Docker Hub, VM, or CHOS images) |
| Registry | Docker Hub | Singularity Hub and/or Docker Hub | Docker Hub via the Shifter gateway |
| MPI support | Altair Grid Engine simplifies parallel jobs on Docker | Yes, multiple MPI implementations | MPICH-centric, but others can be used |
| InfiniBand support | Yes | Yes | Yes |
| Setup requirements | Mature ecosystem, easy to install | Also easy to install | A little more complex owing to the Shifter gateway |
| Licensing | Open-source or commercial versions | Open-source or commercial versions | Open source |
| Workload managers | Strong integration with Altair Grid Engine | Easy to integrate with multiple workload managers; additional Altair Grid Engine support announced | Some documentation on GitHub, but integrations mostly left to users |
| Learning more | http://docker.com | http://sylabs.io | https://github.com/NERSC/shifter |